Do We Still Need Accessible Front-Ends When AI Can See for Us?

November 6, 2025

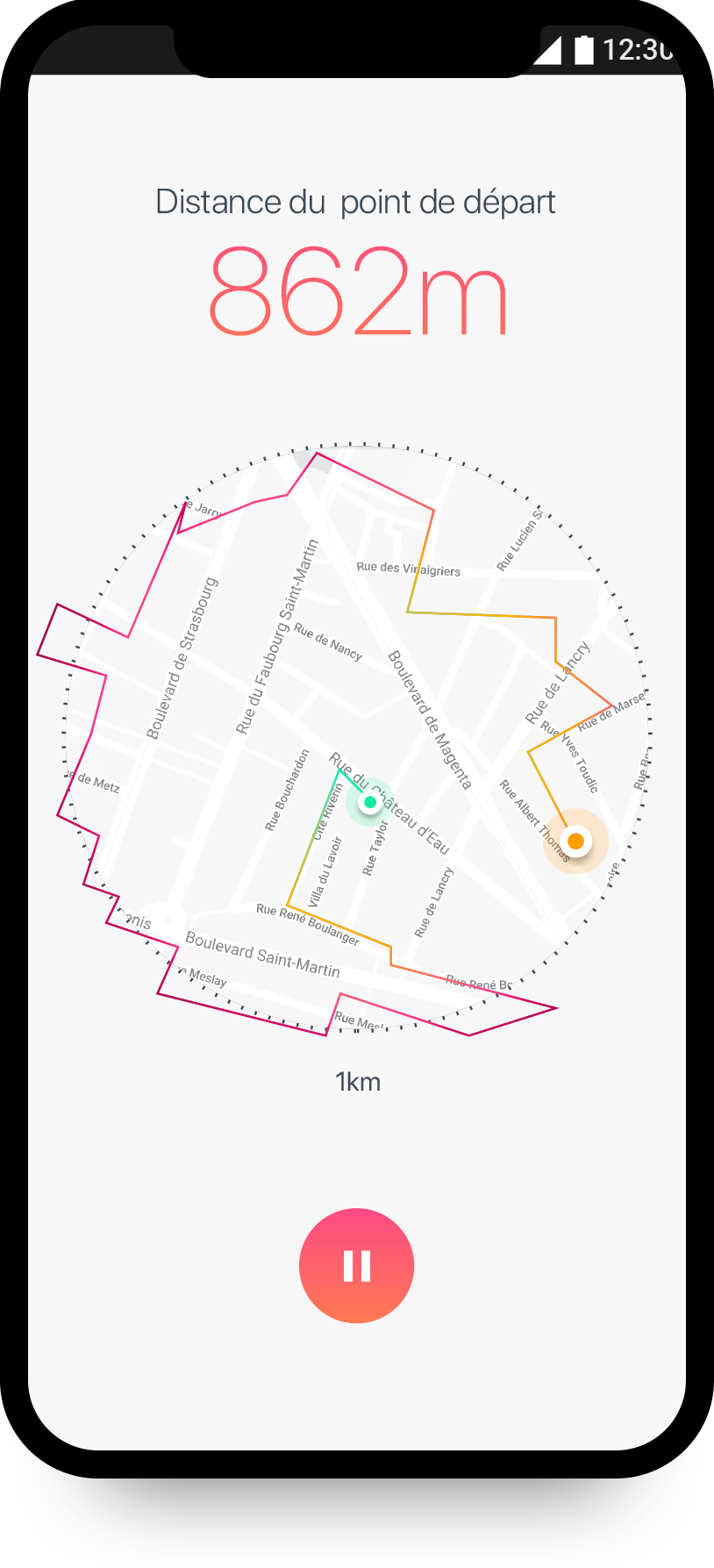

Browsers like Atlas, Comet, and Dia can now read, summarise, and even interact with web pages. Is front-end accessibility still relevant in 2025?

The question

Browsers are changing. They’re no longer just rendering machines that display what developers build. They are starting to interpret. Some can already scan a page, recognise what each element does, and even click on your behalf. A new kind of browsing is emerging where you might delegate the act of navigating entirely to a machine.

That raises a question worth asking:

If AI-native browsers can “see” and “understand” our pages, do we still need to make front-ends accessible?

Accessibility 101

For my non-technical friends, web accessibility simply means designing websites so that everyone can use them. That includes people with visual, auditory, motor, or cognitive impairments. It covers readable text contrast, clear navigation, captions on videos, and support for screen readers and keyboard-only navigation. According to the World Health Organization, roughly 16% of the world's population lives with a significant disability, and many of those people rely on these features to access information, work, and communicate online. And yet, less than 3% of websites meet basic accessibility standards.

Accessibility in a business context

As a product manager, I've been guilty of delaying accessibility work more times than I'd like to admit. I pushed those tasks to the next sprint, or the one after that, because something else felt more urgent. It's easy to convince yourself you'll circle back later, but 'later' rarely comes. Only after seeing how those decisions affected real people did I understand what those trade-offs really meant, and even then I kept delaying some of that work.

After years of delaying this work, I can’t help but feel a strange optimism when I think about where the web might be headed. If AI can describe an image more precisely than alt text, infer a button’s purpose from its context, and navigate without a keyboard, it could mean the web is finally evolving toward a world where inclusion is built in, not patched on later.

It’s a tempting story. But it’s also partially wrong.

How AI browsers see

AI browsers don't truly understand; they approximate. They look at the DOM, the layout, and the copy, and they guess what each part means. Most of the time, they'll be right. Sometimes, they'll be confidently wrong.

That's why accessibility still matters: not only because humans continue to need it, but also because accessibility is the clearest, most reliable way to express intent.

A properly labelled button tells a screen reader what to say. It also tells a machine what the element is for. An aria-label that says “Subscribe to newsletter” isn’t just helpful for someone using assistive tech. It’s a semantic anchor for an AI browser deciding which button to click.
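To make the "semantic anchor" idea concrete, here is a minimal TypeScript sketch. It assumes a hypothetical, DOM-free ElementNode type and a greatly simplified name lookup (aria-label first, then visible text). Real browsers follow the W3C Accessible Name and Description Computation, which also considers aria-labelledby, label elements, alt text, and more, and real AI agents match far more loosely than the exact string comparison used here. The point is only to show how a label gives a machine something reliable to match against.

```typescript
// Hypothetical, DOM-free model of a page element, for illustration only.
interface ElementNode {
  tag: string;
  attrs: Record<string, string>;
  text: string; // visible text content
}

// Greatly simplified accessible-name lookup (an assumption, not the full
// W3C algorithm): a non-empty aria-label wins, otherwise visible text.
function accessibleName(el: ElementNode): string {
  const label = el.attrs["aria-label"]?.trim();
  if (label) return label;
  return el.text.trim();
}

// Hypothetical agent step: pick the button whose name matches a goal,
// using a case-insensitive exact match for clarity.
function findButton(page: ElementNode[], goal: string): ElementNode | undefined {
  const wanted = goal.toLowerCase();
  return page.find(
    (el) => el.tag === "button" && accessibleName(el).toLowerCase() === wanted
  );
}

const page: ElementNode[] = [
  { tag: "button", attrs: { class: "icon-mail" }, text: "" }, // icon-only, unlabelled
  { tag: "button", attrs: { "aria-label": "Subscribe to newsletter" }, text: "" },
  { tag: "button", attrs: {}, text: "Close" },
];

const target = findButton(page, "Subscribe to newsletter");
console.log(target ? page.indexOf(target) : -1); // prints 1
```

Remove the aria-label from the second button and findButton returns undefined: with no label and no text, an agent is left guessing from class names or pixels, which is exactly where it can be confidently wrong.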

In this sense, accessibility has always been about structure and meaning. We used to think of those as human concerns. Now, they’re machine concerns too.

Two layers of accessibility

The web is quietly becoming two layers deep: one for humans and one for agents. The human layer is what we see and touch: the colours, motion, typography, and layout. The agent layer is invisible but equally real. It’s the semantic structure, intent metadata, and predictable patterns that intelligent browsers rely on to make sense of it all.

When we design clearly for humans, we make it easier for machines to navigate. And when we build clear semantics for machines, we make it easier for humans who depend on assistive technologies.

The two goals aren’t in conflict. They’ve always been aligned. We just didn’t have to think about it much until now.

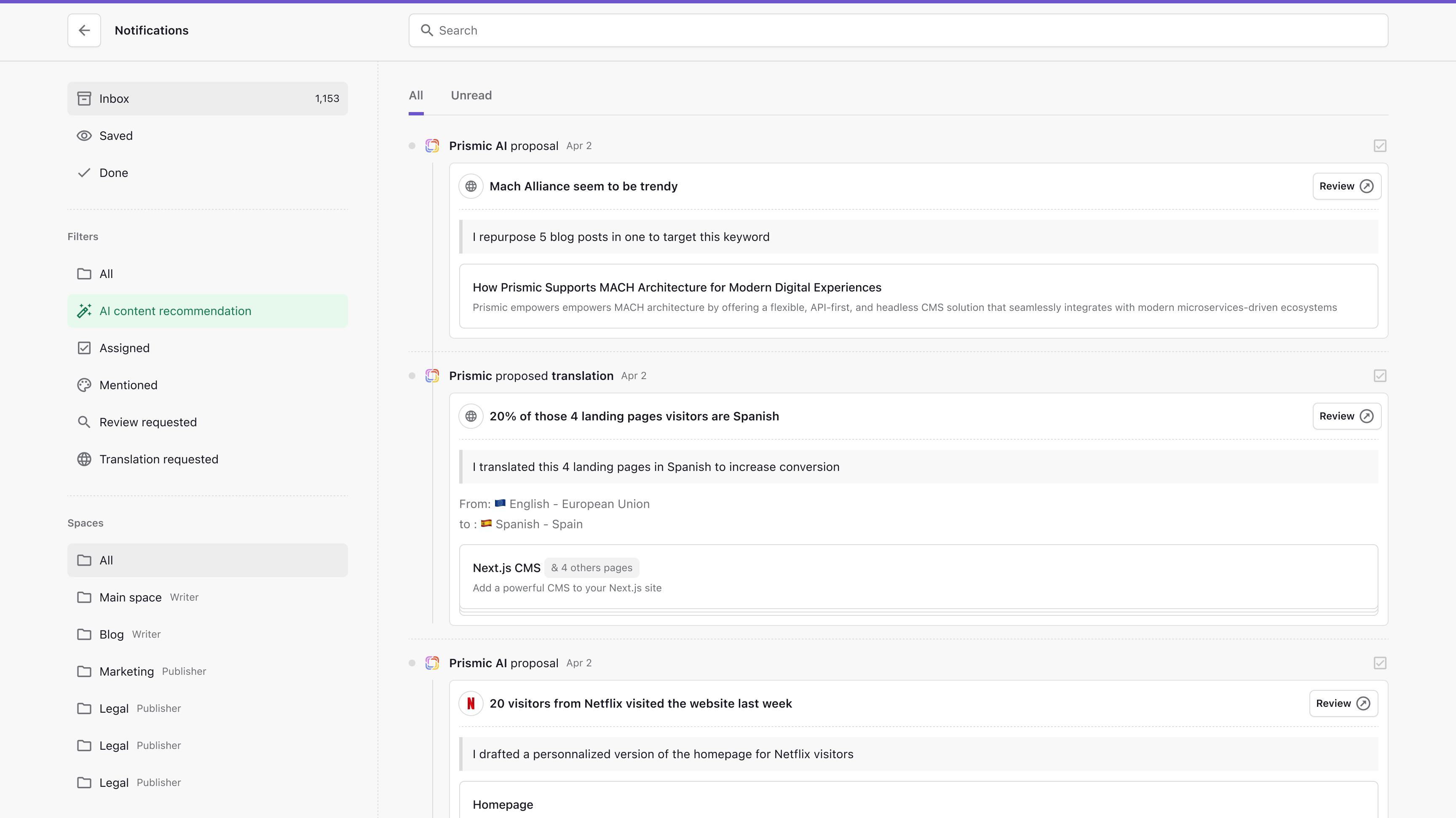

And here’s the irony. The biggest push for accessibility may not come from accessibility advocates at all. It will come from companies chasing visibility.

The future?

When a growing share of traffic flows through AI-native browsers that summarise content, answer queries, or even shop autonomously, businesses will have no choice but to optimise for them. They’ll make their sites easier for machines to parse, label actions more clearly, and clean up messy interfaces. Not because it’s the right thing to do, but because it’s the profitable thing to do.

At the same time, these AI agents and browsers will continue to evolve. Their builders have just as much incentive to make sense of the messy, inconsistent web as the companies trying to be found on it. They'll get better at detecting intent, inferring meaning, and correcting human design mistakes. Businesses and browser makers will both push in the same direction: towards a web that's more structured, more semantic, and more understandable.

That shared effort, even if driven by commercial incentives, will end up benefiting everyone.

When everyone builds for intelligent browsers and agents that rely on structure and clarity, and those browsers get smarter at understanding intent, the result is a web that's finally easier to use for the people who've always needed those same features.

The web might become more inclusive not because of empathy or regulation, but because incentives on both sides finally align.

A slightly cynical path, but a genuinely hopeful outcome.

I'm trying to build good articles about AI with AI. Find the prompt here.